ISWC 2014 is taking place on the shores of Lake Garda, Italy. However, I won’t have much time to relax on the lake. Look out for my tweets (@gray_alasdair). My conference activities start on Sunday 19 October with the first workshop on Context, Interpretation and Meaning (CIM2014), which together with Harry Halpin (W3C) and Fiona […]

ISWC 2014 is taking place on the shores of Lake Garda, Italy. However, I won’t have much time to relax on the lake. Look out for my tweets (@gray_alasdair).

My conference activities start on Sunday 19 October with the first workshop on Context, Interpretation and Meaning (CIM2014), which together with Harry Halpin (W3C) and Fiona McNeill (Heriot-Watt University) I am a chair. We have managed to put together an interesting selection of 5 papers – two focusing on the context of links, two on the interpretation of alignments and one on the meaning of mappings. I am a co-author on this final paper, but Kerstin Forsberg will be presenting the work [1]. We also have an exciting panel session in store with Aldo Gangemi (CNR), Paul Groth (VU University of Amsterdam) and Harry Halpin.

Also taking place on Sunday is the Linked Science Workshop (LISC). Together with Simon Jupp and James Malone of the EBI we have a paper on modelling the provenance for linksets of convenience [2]. A linkset of convenience is one that does not model the underlying science correctly, but provides a convenient shortcut for linking data. An example from the world of biology is a linkset that directly links genes with their protein product.

On Monday I will be working with the W3C RDF Stream Processing (RSP) Community Group. We have been having regular phone meetings for the last year and have made great progress towards defining a common community model for RDF streams and a query language for processing them. The group will largely be attending the Stream Ordering Workshop and the Semantic Sensor Networks Workshop.

Tuesday is the first day of ISWC, and it is going to be a busy one for me. In the morning I will be presenting the Open PHACTS paper on our work enabling scientific lenses for chemistry data [3]. In the evening I will be at the poster and demonstration session showing off the Open PHACTS VoID Editor [4].

Finally, I am organising the Lightning Talks session on the last day of the conference. This is a session where you can present late breaking results or responses to work presented in the conference. Talks will be 5 minutes each and abstracts can be submitted until 8.30 am on Thursday.

After ISWC I think I’m going to need a break.

[1] S. Hussain, H. Sun, G. B. L. Erturkmen, M. Yuksel, C. Mead, A. J. G. Gray, and K. Forsberg, “A Justification-based Semantic Framework for Representing , Evaluating and Utilizing Terminology Mappings,” in

Context. Interpret. Mean., Riva del Garda, Italy, 2014.

[Bibtex]

@inproceedings{Hussain2014CIM,

abstract = {Use of medical terminologies and mappings across them are consid- ered to be crucial pre-requisites for achieving interoperable eHealth applica- tions. However, experiences from several research projects have demonstrated that the mappings are not enough. Also the context of the mappings is needed to enable interpretation of the meaning of the mappings. Built upon these experi- ences, we introduce a semantic framework for representing, evaluating and uti- lizing terminology mappings together with the context in terms of the justifica- tions for, and the provenance of, the mappings. The framework offers a plat- form for i) performing various mappings strategies, ii) representing terminology mappings together with their provenance information, and iii) enabling termi- nology reasoning for inferring both new and erroneous mappings. We present the results of the introduced framework using the SALUS project where we evaluated the quality of both existing and inferred terminology mappings among standard terminologies.},

address = {Riva del Garda, Italy},

author = {Hussain, Sajjad and Sun, Hong and Erturkmen, Gokce Banu Laleci and Yuksel, Mustafa and Mead, Charles and Gray, Alasdair J G and Forsberg, Kerstin},

booktitle = {Context. Interpret. Mean.},

file = {:Users/Alasdair/Documents/Mendeley Desktop/2014/Hussain et al. - A Justification-based Semantic Framework for Representing , Evaluating and Utilizing Terminology Mappings.pdf:pdf},

title = {{A Justification-based Semantic Framework for Representing , Evaluating and Utilizing Terminology Mappings}},

year = {2014}

}

[2] S. Jupp, J. Malone, and A. J. G. Gray, “Capturing Provenance for a Linkset of Convenience,” in

Proceedings of the 4th Workshop on Linked Science 2014 – Making Sense Out of Data (LISC2014) co-located with the 13th International Semantic Web Conference (ISWC 2014), Riva del Garda, Italy, 2014, pp. 71-75.

[Bibtex]

@inproceedings{Jupp2014,

address = {Riva del Garda, Italy},

author = {Jupp, Simon and Malone, James and Gray, Alasdair J G},

booktitle = {Proceedings of the 4th Workshop on Linked Science 2014 - Making Sense Out of Data (LISC2014)

co-located with the 13th International Semantic Web Conference (ISWC 2014)},

publisher = {CEUR},

month = oct,

volume = {1282},

pages = {71-75},

title = {{Capturing Provenance for a Linkset of Convenience}},

url = {http://ceur-ws.org/Vol-1282/lisc2014_submission_7.pdf},

year = {2014}

}

[3]

![[doi]](http://www.macs.hw.ac.uk/~ajg33/wp-content/plugins/papercite/img/external.png)

C. R. Batchelor, C. Y. A. Brenninkmeijer, C. Chichester, M. Davies, D. Digles, I. Dunlop, C. T. A. Evelo, A. Gaulton, C. A. Goble, A. J. G. Gray, P. T. Groth, L. Harland, K. Karapetyan, A. Loizou, J. P. Overington, S. Pettifer, J. Steele, R. Stevens, V. Tkachenko, A. Waagmeester, A. J. Williams, and E. L. Willighagen, “Scientific Lenses to Support Multiple Views over Linked Chemistry Data,” in

The Semantic Web – ISWC 2014 – 13th International Semantic Web Conference, Riva del Garda, Italy, October 19-23, 2014. Proceedings, Part I, 2014, pp. 98-113.

[Bibtex]

@inproceedings{iswc2014,

author = {Colin R. Batchelor and

Christian Y. A. Brenninkmeijer and

Christine Chichester and

Mark Davies and

Daniela Digles and

Ian Dunlop and

Chris T. A. Evelo and

Anna Gaulton and

Carole A. Goble and

Alasdair J. G. Gray and

Paul T. Groth and

Lee Harland and

Karen Karapetyan and

Antonis Loizou and

John P. Overington and

Steve Pettifer and

Jon Steele and

Robert Stevens and

Valery Tkachenko and

Andra Waagmeester and

Antony J. Williams and

Egon L. Willighagen},

title = {Scientific Lenses to Support Multiple Views over Linked Chemistry

Data},

booktitle = {The Semantic Web - {ISWC} 2014 - 13th International Semantic Web Conference,

Riva del Garda, Italy, October 19-23, 2014. Proceedings, Part {I}},

month = oct,

year = {2014},

pages = {98--113},

url = {http://dx.doi.org/10.1007/978-3-319-11964-9_7},

doi = {10.1007/978-3-319-11964-9_7},

}

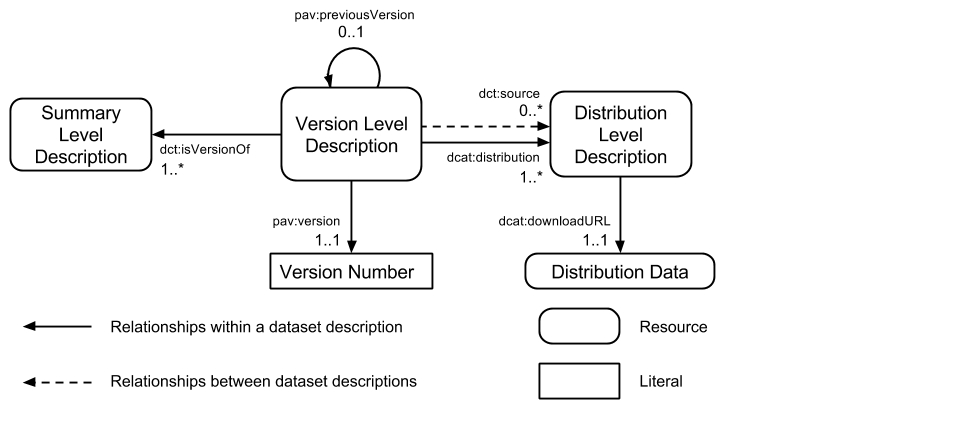

[4] C. Goble, A. J. G. Gray, and E. Tatakis, “Help me describe my data: A demonstration of the Open PHACTS VoID Editor,” in

ISWC 2014 – Poster Demos, Riva del Garda, Italy, 2014, pp. 1-4.

[Bibtex]

@inproceedings{Goble2014,

address = {Riva del Garda, Italy},

author = {Goble, Carole and Gray, Alasdair J G and Tatakis, Eleftherios},

booktitle = {ISWC 2014 – Poster Demos},

month = oct,

pages = {1--4},

title = {{Help me describe my data: A demonstration of the Open PHACTS VoID Editor}},

year = {2014}

}