The ACM Web Conference (the previous WWW) is the most prestigious and oldest conference focusing on the World Wide Web research and technologies. The conference began at CERN in 1994, where the Web was first born and runs annually till 2022. This year, conference management was transferred from the International World Wide Web Conferences Committee (IW3C2) to ACM. I was able to attend the conference as my RQSS paper was accepted in the PhD Symposium track (after the complex problems that occurred during registration :)). It was a real pleasure. The conference was held virtually from Lyon, France. In this post, I write a report of the sessions I’ve been in and interesting points from my own experiences.

Day 1 – Mon 25 April – Graph Learning Workshop and KGTK Tutorial

Monday, April 25 was the first day of the conference. Because I was in Iran during the conference, I was experiencing a 2.5-hour time zone difference, which made it a bit difficult for me to schedule between personal matters and the conference program. On the first day (as well as other days) there were quite interesting tracks that I had to choose because of the overlap. The Web for All track (W4A) was an example that I really wanted to participate in but I couldn’t. However, because my research focuses on the semantic web and knowledge graphs right now, I tried to attend semantic webby sessions.

For the first day, I chose the Graph Learning workshop. The workshop began with a keynote by Wenjie Zhang from the University of New South Wales. The topic of their lecture was the applications and challenges of Structure-based Large-scale Dynamic Heterogeneous Graphs Processing. In this lecture, They mentioned the improvement of efficiency in Graph Learning and having a development model for Graph Learning as the advantages of this method and considered “detecting money laundering” and “efficient suggestion in social groups” as its applications.

In Technical Session 1, I watched an interesting classic Deep Learning approach in Graph Learning called CCGG. A strong team from the high-rank universities in Iran has developed an autoregressive approach for the class-conditional graph generation. The interesting thing for me was that the team is led by Dr Soleymani Baghshah. She had previously been my Algorithm Design lecturer at the Shahid Bahonar University of Kerman. Another interesting point was that one of their evaluation datasets is protein graphs (each protein in the form of a graph of amino acids). I hoped one of the authors would be there live to answer the questions, but it was not. The presentation was also pre-recorded.

I also watched another interesting work on the Heterogeneous Information Network (HIN), called SchemaWalk. The main point of that research is using a schema-aware random walk method. In this method, the desired results can be obtained without worrying about the structure of the heterogeneous graph. The first keynote and this presentation on HIN caught my attention in the field, and I will certainly read more about heterogeneous graphs in the future.

The next interesting work was on Multi-Graph based Multi-Scenario video recommendation. I didn’t get much out of the details of the presentation, but trying to learn from multiple graphs at the same time seemed like an interesting task. Keynote 3 of the workshop was about Graph Neural Networks. Michael Bronstein from the University of Oxford had a nice presentation on how they replaced the common node-vertex perspective (discrete nature) in Graph Learning with a continuous perspective derived from physics and mathematics. I found the blog post here of the study, written by Michel. Overall, the Graph Learning workshop was very dense and quite useful. The topics will be very close to my future research (postdoc possibly), so personally, I’ll return and sit again to review many of these studies soon.

During the day, I visited the KGTK tutorial two or three times. I like their team. They are very active and have been involved in almost every major web and linked-data event in the last couple of years. KGTK tutorials are especially important to me as we are currently preparing to perform several performance and reliability tests on this tool and I am in charge of performing the technical test steps, so the more I know about the tool the better I can run experiments. In this tutorial, a good initial presentation by Hans Chalupsky overviewed the tool. Like other KGTK tutorials, this session was followed by demonstrations of Kypher language capabilities and tool’ Google Collaborate notebooks. The full material of the tutorial can be found in this repository.

The hard part of the first day for me was that my presentation was on the second day and I’ve had the stress and anxiety of that throughout the day. Surely, a considerable part of my focus was consumed on my slides, my notes and my presentation style. I was tweaking them frequently.

Day 2 – Tue 26 April – PhD Symposium

On the second day, I was supposed to present my work at the PhD symposium. I was going to attend the Beyond Facts workshop at the beginning of the day. But I needed to tweak the slides and manage my presentation timing because I was exceeding 15 minutes in the first run! Therefore, I dedicated the second day only to the PhD symposium and I was completely happy with my choice, as 7 of the 10 accepted papers were related to Semantic Web.

The PhD symposium consisted of three main sessions. Knowledge Graphs, SPARQL, and Representation and Interaction. My paper was in the first session in which 22 audiences were present. Before presenting myself, I enjoyed the “Personal Knowledge Graphs” paper. It was an interesting idea. Personal knowledge graphs are small graphs of information that are formed around an individual person. The authors have used these graphs to assist e-learning systems and have been able to make a positive impact on personal recommendations.

Then, it was my turn to speak. My presentation went very well. As I had a lot of practice and edited the slides many times, I had a good dominance in the talk and finished the presentation in exactly 15 minutes. There were 22 listeners when I started. My presentation was the last presentation of the session so my Q&A part was more flexible and took more than three minutes. The chair (Elena) asked me about the number of metrics and why there are such a high number of metrics? I answered that: during the definition phase of the framework, we tried to be as comprehensive as possible, after implementing and reviewing the results, we may want to remove some of the criteria, those that do not provide useful information. Elena also asked if we ever used human participation, questionnaires, or any human opinions data to evaluate quality. I answered that our focus is on objective metrics right now and we do not need to collect human user opinions for them (which facilitate the automaticity of the framework), but we probably will do that in the next steps.

Aleksandr, the author of the first paper of the session, also introduced a tool called Wikidata Complete, which is a kind of relation extraction and linking tool for Wikidata that also can suggest references. I’d need to look at it and see if there are any evaluations of this tool. After finishing my presentation and answering the questions, my duty at the conference has been finished. So I was happy that I could continue the conference with ease of mind.

Following the PhD symposium, at the SPARQL session, Angela gave a keynote address on Query-driven Graph Processing. In this presentation, a complete overview of data graph models, property graphs, RDF graphs and different types of graph patterns used in queries was performed. In this presentation, the concepts of Robotic Queries and Organic Queries were mentioned. Organic queries are those types of queries that humans perform on the KG endpoints. Robotic queries are those queries that are executed in automated tools by scripts, bots, and algorithms. Angela believed that robotic queries have a specific pattern. For example, these queries are on average shorter in length and use fewer variables. At the end of the presentation, there was an interesting discussion about query streaming.

At the SPARQL session, we had another presentation on Predicting SPARQL Queries Dynamics. In this presentation, the authors were working to predict possible changes in the output of a query and even estimate the dynamics of query results based on the variance of changes in the data level. They evaluated their approach on Wikidata and DBPedia.

Day 3 – Wed 27 April – Semantics

I spent the third day attending the Semantics track. The track had two sessions: Semantics: Leveraging KGs and Taxonomy Learning. The first session had problems at the beginning. The first presentation, which was a pre-recorded video, had playback problems and took about ten minutes longer. The second presentation was about learning and not had much about graph-related issues, and its timing was also a bit messy. Other presentations also were related very much to Machine Learning. But the latest presentation “Path Language Modeling over Knowledge Graphs” uses reasoning over KGs to provide explainable recommendations, special for each user. In their experiments, they have used 3 categories of Amazon consumer transaction datasets. They compared their method with 6 baseline approaches, like KGAT and CAFE and in all categories and also for all metrics (Precision, Recall, NDCG, and HR) they obtained the best results compared to baselines.

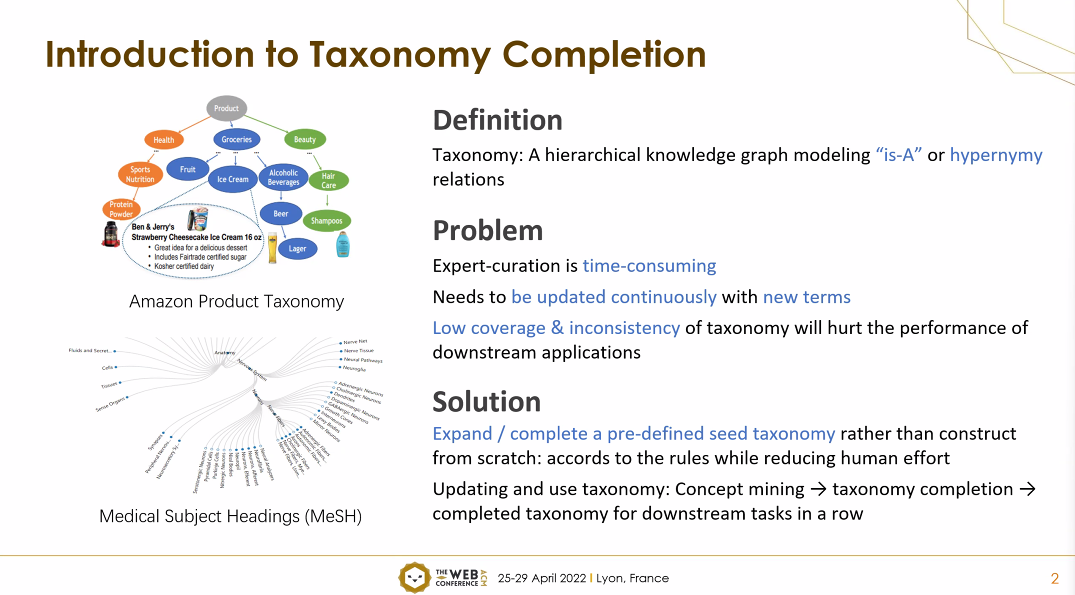

In the second session, Taxonomy Learning, there were two interesting presentations on Taxonomy Completion. It made me search a bit about the Taxonomy Completion task. The task is somehow enriching the existed taxonomies (the hierarchy of things) with rapidly expanding new concepts. In the first presentation, a new framework called Quadruple Evaluation Network(QEN) was introduced that prevents using hardly accessible word vectors for new concepts, instead, benefits from the concept of the Pretrained Language Model which enhances the performance and reduces online computational costs. In the second presentation, the authors created a “Self-Supervised Taxonomy Completion” approach using Entity Extraction Tools from raw texts. For the evaluation, they used Microsoft Academic Graph and WordNet. The notions of the session were a bit out of my knowledge and also my current research focus, but the problems and the solutions that the authors had were quite interesting, so it was added to my To-Read list! Between the two presentations about Taxonomy Completion, there was a nice talk on Explainable Machine Learning on Knowledge Graphs in the presentation of the “EvoLearner” paper.

The afternoon was the opening day ceremony of the web conference, with the introduction of the organizers, the nominees for the best paper awards, and the official transfer of the conference from IW3C2 to ACM. The remarkable thing was how the ceremony was held in a hybrid way. The high discipline of the ceremony and its real-time direction was very remarkable.

Our keynote speaker on Wednesday was Prabhakar Raghavan from Google, and the title of his presentation was “Search Engines: From the Lab to the Engine Room, and Back”. One of the key points was how search engines, including Google, evolved from “keyword” processing and showing just a lot of web links to processing “entities” and finally processing “actions”. In addition to the specific style of presentation and the important content that he expressed, an important part of his presentation was about the quality of information and the problem of misinformation. In this regard, I asked a question related to my research on Wikidata references. Since a lot of information on every search comes in from the KGs, I asked the question: “Does using references and data sources, as Wikidata does, increase the quality and eliminate misinformation?” Prabhakar’s answer was that: references can improve the quality of data and affect the ranking of results, but we do not use them to remove any information. Instead, only policy violations can lead to the deletion of information.

Day 4 – Thu 28 April – Infrastructure

Sessions on the fourth day of the conference were generally about Recommendations. Tracks like UMAP, Search, Industry, WMCA, and Web4Good have had sessions on Recommendations. However, I did not find them very relevant to my research direction. That’s why I attended the Graphs and Infrastructure session of the Industry track. My guess was that these sessions were going to say something interesting about the infrastructure needed to process and manage big graphs, and my prediction was correct.

The first research was about Eviction Prediction in Microsoft Cloud Virtual Machines. In this paper, instead of the current hierarchical Node-Cluster system in predicting, a more efficient node-level prediction method is presented. The best part of this presentation was stating the limitations and plans to overcome them. One of the limitations of this method is that there is a lack of interpretability to use in prediction at the node level. There will also be many parameters to configure compared to traditional methods. To deal with this situation, the authors suggested using a prediction distribution instead of accurate forecasting.

Another paper was about Customer Segmentation using knowledge graphs. I really liked their explanation of the problem. A good explanation at the beginning of a presentation prepares the audiences’ minds to follow the stream and connect complex concepts together correctly. Anyway, traditional methods for Customer Segmentation are still based on questionnaires and domain experts’ opinions. In large datasets that have not been annotated, these methods will be very time-consuming. Instead of traditional methods, the authors used a KG-based clustering and tested their implementation on two customer datasets from the beverage industry.

There was also another interesting paper titled “On Reliability Scores for Knowledge Graphs”. They tried to score the data stored in an industrial KG (about product taxonomy that archives data from different sources automatically) to find only reliable data. The authors used 5 criteria for impact analysis: numerical-based, constraint-based, clustering, anomaly detection and provenance based which the last is very similar to the data quality category “trust” which I’m working on right now. This paper was the closest study to my research during the event.

The last presentation was about DC-GNN, a framework for helping E-Commerce dataset training based on Graph Neural Network (GNN). Using GNN has some benefits for training, for example, the features can be extracted topologically from the data and a relational extraction can be done. However, the huge amount of data will threaten the efficiency of algorithms and their representation of them. DC-GNN enhances the efficiency by separating traditional GNNs-based two-tower CTR prediction paradigms into three simultaneous stage methods.

After the Industry track, I joined the second keynote of the conference titled “Responsible AI: From Principles To Action” presented by Virginia Dignum from Umea University (Sweden). She had a very nice talk on the current situation of AI, the things that AI nowadays can do, like identifying patterns in images, texts and videos, extrapolating the patterns to new data and taking actions based on the patterns, as well as the things AI cannot do yet, like learning from a few examples, common scene reasoning (context and meaning), learning general concepts and combining learning and reasoning from data. She also mentioned the challenges of learning from data like the impact of the wisdom of the crowd, the impact of errors and adversaries, and the impact of biases. The main point of the talk was that we need to consider who should decide about what AI can or should do in the future and which values should be considered and how the values should be prioritized. That was a very nice talk!

Day 5 – Fri 29 April – History of Web

There were some very interesting sessions on the final day of the conference, such as Structured Data and Knowledge Graphs, Graph Search, and Social Web. But I decided to participate in the History of Web track. My reason was that this way I could get a good insight into the history of the Web, the problems that existed in the beginning, and the solutions people had for the problems to be resolved, as well as information about the evolution of the WWW conference itself since the early 90. At first, the title of the track reminded me of a part of Professor Tanenbaum’s book (Computer Networks) first chapter, and also John Naughton’s book “A Brief History of the Future” which delves into the history of the Internet.

The History of Web session consisted of papers and talks that scientifically analyzed Web development during its birthday at CERN. The first presentation, entitled “A Never-Ending Project for Humanity Called the Web”, was presented by Fabien Gandon, which shows the great changes that the Web is examining in human life. The message of this presentation was that despite the profound impact of the Web on information exchange, the Semantic Web, the Web of Things, multimedia, and authentication, much of the potential of the Web has not yet been exploited.

Then we had two guest speakers, George Metakides from the EU and Jean-François Abramatic from INRIA, who shared the history of the W3C in the first ten years on the web.

Another interesting presentation was by Damien Graux, who examined the history of the web through the lens of annual Web Conferences (formerly WWW). This study examined how academia has interacted with web technology in the last 30 years. The paper analyses statistics on the main topics and areas, authors, affiliations of the authors, countries participating in the research, citations of the web conference on other topics, and much other information. This article has historical and scientific value together. I suggest you take a look at it!

After all, I participated in the closing ceremony. Like the other parts of the conference, it was also interesting. In the end, we have been introduced to the next General Chair of The Web Conference 2022, Dr Juan Sequeda who I’m quite familiar from ISWC 2021.

Last words

In the final words, The Web Conference 2022 experience was excellent. The presentations were very interesting and understandable. The timing and discipline of the sessions were great. The chairs did their work very well. The response from the authors was nice and friendly. I liked so many presentation styles and I also learned from the speakers very much. Although the opportunity to be in France and the city of Lyon was very precious and it was not possible due to the online event, participating in the conference alongside my family from Iran made an incredible memory for me. I hope to be able to attend the conference in Austin, Texas next year, and maybe I’ll have something precious to present there 😉