PhD Research

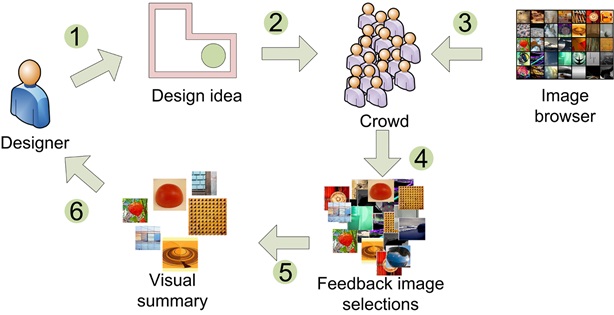

My PhD work was on the CDI (Creativity, Design and Innovation theme) "Head-Crowd" project, an interdisciplinary project spanning the Schools of Mathematics and Computer Science (MACS) and Textile and Design (TEX). It focused on perceptual image browsing, visual communication, visual summary and the interpretation of visual design feedback. My thesis is titled "Crowdsourced Intuitive Visual Design Feedback". I defended it on April 10th and graduated on June 25th 2015.

Visual Crowd Communication

Moodsource: Enabling Perceptual and Emotional Feedback from Crowds

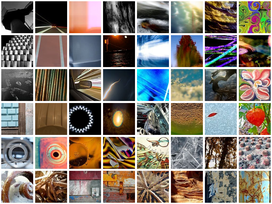

Part of my work on capturing visual feedback involved building a perceptually relevant image browser populated with a set of abstract images. The images were screen-scraped from Flickr.com (see project acknowledgements below). Human perceptions of the relative similarity of the images were captured using techniques devised by Dr. Fraser Halley (see his PhD thesis). My thesis can be downloaded from here, or you can search for it by its title, Crowdsourced intuitive visual design feedback.

Abstract Image Set in the SOM Browser

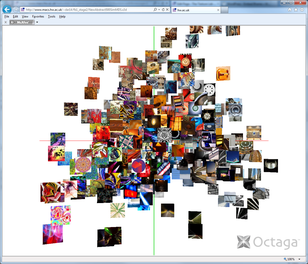

The project abstract image set shown in the Self Organising Map (SOM) Browser.

Images judged by observers to be highly similar to each other are grouped together in stacks. Adjacent stacks contain images judged more similar to each other than stacks farther apart. Note how the observers' similarity judgements and the SOM construction algorithm have resulted in apparently themed regions in the browser, e.g. architectural images at the top right and highly abstract, colourful images at the top left.
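As a rough illustration of how a SOM can turn per-image vectors into stacks, here is a minimal sketch in Python with NumPy. It assumes each image is represented by a feature vector (hypothetically, its row of a pairwise similarity matrix); the function names, grid size, and learning-rate and neighbourhood schedules are illustrative choices, not the parameters used to build the project browser.

```python
import numpy as np

def train_som(data, rows=4, cols=4, epochs=200, lr0=0.5, sigma0=None, seed=0):
    """Train a small rectangular SOM on the rows of `data` (one vector per image)."""
    rng = np.random.default_rng(seed)
    n, _ = data.shape
    if sigma0 is None:
        sigma0 = max(rows, cols) / 2.0
    # Grid position of every node, used by the neighbourhood function.
    grid = np.array([(r, c) for r in range(rows) for c in range(cols)], dtype=float)
    # Initialise node weights from randomly chosen image vectors.
    weights = data[rng.integers(0, n, rows * cols)].astype(float)
    for epoch in range(epochs):
        frac = epoch / epochs
        lr = lr0 * (1.0 - frac)               # learning rate decays linearly
        sigma = sigma0 * (1.0 - frac) + 0.5   # neighbourhood shrinks over time
        for i in rng.permutation(n):
            x = data[i]
            # Best-matching unit: the node whose weights are closest to x.
            bmu = np.argmin(((weights - x) ** 2).sum(axis=1))
            # Gaussian neighbourhood on the grid around the BMU.
            d2 = ((grid - grid[bmu]) ** 2).sum(axis=1)
            h = np.exp(-d2 / (2.0 * sigma ** 2))
            weights += lr * h[:, None] * (x - weights)
    return weights, grid

def assign_stacks(data, weights):
    """Map each image to its best-matching node; images sharing a node form a stack."""
    d2 = ((data[:, None, :] - weights[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1)
```

Because neighbouring nodes are pulled towards the same inputs during training, images that end up in adjacent stacks tend to be more similar than those in distant stacks, which is the property the browser relies on.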

The SOM browser features in the following publications:

S. Padilla, D. Robb, F. Halley, M. J. Chantler. Browsing Abstract Art by Appearance. Predicting Perceptions: The 3rd International Conference on Appearance, 17-19 April 2012, Edinburgh, UK. Conference Proceedings Publication ISBN: 978-1-4716-6869-2, pages 100-103. Download PDF

S. Padilla, F. Halley, D. Robb, M. J. Chantler. Intuitive Large Image Database Browsing using Perceptual Similarity Enriched by Crowds. Computer Analysis of Images and Patterns, Lecture Notes in Computer Science, Volume 8048, 2013, pp. 169-176. Springer Link

Abstract Image Set in a 3D MDS Visualisation

View the image set in a 3D view as an animated GIF (8Mb)

The collective similarity judgements of human observers about the images can perhaps be better visualised in a 3D “similarity” space. The closer one image is to another, the more similar the two were judged to be by observers; conversely, the farther apart two images are, the less similar they were judged to be. The same similarity data was used as input to construct the SOM browser.
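A 3D layout like this can be approximated with classical (Torgerson) multidimensional scaling. The sketch below assumes a symmetric similarity matrix scaled to [0, 1] and converts it to dissimilarities as 1 - s; that conversion, the function name and the choice of classical rather than non-metric MDS are assumptions for illustration, not a description of how the project's visualisation was produced.

```python
import numpy as np

def classical_mds(similarity, dims=3):
    """Embed items in `dims` dimensions from a symmetric similarity matrix in [0, 1]."""
    s = np.asarray(similarity, dtype=float)
    # Assumption: dissimilarity = 1 - similarity (so identical images are 0 apart).
    d = 1.0 - s
    n = d.shape[0]
    # Double-centre the squared dissimilarities: B = -1/2 * J D^2 J.
    j = np.eye(n) - np.ones((n, n)) / n
    b = -0.5 * j @ (d ** 2) @ j
    # Keep the `dims` largest eigenvalues; clip tiny negative ones caused by noise.
    vals, vecs = np.linalg.eigh(b)
    order = np.argsort(vals)[::-1][:dims]
    vals = np.clip(vals[order], 0.0, None)
    # Coordinates are eigenvectors scaled by the square roots of their eigenvalues.
    return vecs[:, order] * np.sqrt(vals)
```

When the dissimilarities are exactly Euclidean, the pairwise distances between the returned points reproduce them; real judgement data is noisier, so the 3D view is an approximation.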

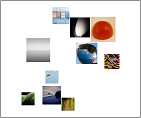

Perceptually Relevant Image Summaries

If a crowd were asked to give feedback by selecting images from the browser, the gathered image selections might be so numerous as to overwhelm a designer seeking the feedback. To address this problem we developed an algorithm to generate visual summaries consisting of representative images. The algorithm uses clustering based on the image selections and the human perceptual similarity data previously gathered on the image set (the same data that is used to organise the abstract image browser). The algorithm is described in the CHI'15 paper.
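The actual summarisation algorithm is the one described in the CHI'15 paper. As a loosely related illustration only, the sketch below summarises a crowd's selections with a count-weighted k-medoids clustering over a perceptual dissimilarity matrix and returns one representative (medoid) image per cluster, largest cluster first. The function name `summarise`, the use of k-medoids and the weighting by selection count are assumptions, not the project's method.

```python
from collections import Counter

import numpy as np

def summarise(selections, dissim, k, iters=20):
    """Pick up to k representative image ids from a list of (possibly repeated) selections."""
    counts = Counter(selections)               # how often each image was chosen
    items = sorted(counts)
    w = np.array([counts[i] for i in items], dtype=float)
    d = np.asarray(dissim, dtype=float)[np.ix_(items, items)]
    k = min(k, len(items))
    # Start from the k most frequently selected images.
    medoids = list(np.argsort(-w, kind="stable")[:k])
    for _ in range(iters):
        labels = np.argmin(d[:, medoids], axis=1)
        new = []
        for c in range(k):
            members = np.flatnonzero(labels == c)
            if members.size == 0:              # keep an empty cluster's old medoid
                new.append(medoids[c])
                continue
            # Medoid: member with the smallest count-weighted dissimilarity to the rest.
            cost = d[np.ix_(members, members)] @ w[members]
            new.append(members[np.argmin(cost)])
        if new == medoids:
            break
        medoids = new
    labels = np.argmin(d[:, medoids], axis=1)
    # Order representatives by the total number of selections their cluster covers.
    weight = [w[labels == c].sum() for c in range(k)]
    order = np.argsort(weight, kind="stable")[::-1]
    return [items[medoids[c]] for c in order]
```

Weighting by selection count means an image chosen by many crowd members is more likely to become its cluster's representative, so the summary reflects what the crowd picked most often.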

The image summarisation also features in the CSCW'15 extended abstract and in the following publications:

B. Kalkreuter and D. Robb. HeadCrowd: visual feedback for design. Nordic Textile Journal, Special edition: Sustainability & Innovation in the Fashion Field, issue 1/2012, ISSN 1404-2487, CTF Publishing, Borås, Sweden, pages 70-81. Download PDF

B. Kalkreuter, D. Robb, S. Padilla, M. J. Chantler. Managing Creative Conversations Between Designers and Consumers. Future Scan 2: Collective Voices, Association of Fashion and Textile Courses Conference Proceedings 2013, ISBN 978-1-907382-64-2, pages 90-99. Download PDF

Communication Experiment

To show that communication is possible using the crowdsourced visual feedback method (CVFM), we carried out an experiment in which one group of participants was shown terms and asked to choose images from the abstract image browser to represent them. Summaries were made from the gathered images. The raw term image selections and the summaries were then shown to a second group of participants, who rated the degree to which they could see the meaning of the terms in the stimuli they were shown. The term weights output by this second group allowed the effectiveness of both the communication and the summarisation to be measured.
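The text does not say how the term weights were analysed. Purely as a hypothetical illustration, the sketch below averages the ratings for each (term, condition) pair and compares the summary condition with the raw selections. The tuple format, the condition labels "raw" and "summary", the function names and the ratio used as a retention measure are all assumptions.

```python
from collections import defaultdict
from statistics import mean

def mean_term_weights(ratings):
    """ratings: iterable of (term, condition, score). Returns {(term, condition): mean score}."""
    groups = defaultdict(list)
    for term, condition, score in ratings:
        groups[(term, condition)].append(score)
    return {key: mean(scores) for key, scores in groups.items()}

def summary_retention(weights, term):
    """Hypothetical measure: summary mean weight / raw mean weight (1.0 = nothing lost)."""
    return weights[(term, "summary")] / weights[(term, "raw")]
```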

A video available in the ACM Digital Library describes the experiment, which features in the DIS’16 paper listed on the publications page. The terms, the raw term image selection lists and the algorithmically generated summaries from the experiment can be viewed using this viewer web application: The Fb Viewer (V2). It was designed to be tablet (and 'phablet') friendly, but it was created before our web server here changed to HTTPS, and it uses some PHP features which servers running HTTPS often inhibit. If it malfunctions when you try it, you may be able to overcome that by removing the s from https in your browser's address bar so that the page is served over HTTP instead. That should allow my legacy PHP code from 2014 to function.

If the mobile-friendly version linked above does not work, you can instead try the version designed for desktops and laptops: desktop version.

Emotive Image Browser

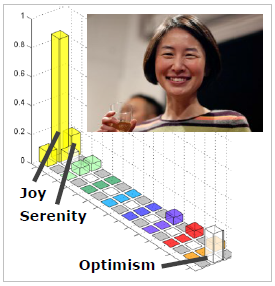

To allow more figurative communication, a second browser was built. A set of 2000 images was categorised by tagging each image with terms from an emotion model, so every image has a normalised emotion tag frequency profile (see image above) representing the judgements of 20 paid crowdsourced participants.

Using these profiles, the set was filtered down to 204 images covering a subset of emotions suited to design conversation. The emotive images are arranged in a SOM browser defined by their emotion profiles (frequency vectors), in a similar way to the abstract browser, which is based on similarity vectors.
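A minimal sketch of the profile and filtering steps, assuming each participant's tags for an image are pooled into a single list of terms. Normalising each profile to sum to 1, and filtering by the share of a profile that falls on a chosen subset of emotions with a hypothetical `min_share` threshold, are illustrative assumptions; the exact normalisation and filtering criteria are not stated here. The emotion terms in the usage line are hypothetical placeholders, not the project's emotion model.

```python
from collections import Counter

def emotion_profile(tags, vocabulary):
    """Normalised tag-frequency vector for one image (sums to 1 if it has any tags)."""
    counts = Counter(t for t in tags if t in vocabulary)
    total = sum(counts.values())
    return [counts[t] / total if total else 0.0 for t in vocabulary]

def filter_images(profiles, vocabulary, keep, min_share=0.5):
    """Keep images whose profile mass on the `keep` emotions is at least `min_share`."""
    idx = [vocabulary.index(t) for t in keep]
    return [img for img, p in profiles.items() if sum(p[i] for i in idx) >= min_share]

# Usage with hypothetical terms: pooled tags from several participants for one image.
profile = emotion_profile(["calm", "calm", "joy", "calm"], ["joy", "calm", "anger"])
```

The resulting frequency vectors have the same shape for every image, so they could be passed to a SOM in the same way as the abstract images' similarity vectors.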

The emotion image browser features in the CHI'15 paper and the CSCW'15 extended abstract.

Evaluation

The crowdsourced visual feedback method (CVFM) was evaluated in a study in which interior design students put forward their designs for feedback and a group of student participants acted as the crowd giving feedback. The crowd were shown the designs in a random order and asked "How did the design make you feel?". They were asked to give their feedback in the form of abstract images, emotive images and text. The CHI'15 paper reports the designer side of the evaluation and the CSCW'15 extended abstract reports the crowd side. Later, after completing the PhD, I had the opportunity to research the crowd side further, and this is reported in a DIS'17 paper.

Acknowledgments

Image sets:

The project has established two databases of images for use in visual feedback. The images were sourced from Google and Flickr, and all have Creative Commons licences. The contributors are acknowledged below.

Acknowledgement of Creative Commons images

The databases can be downloaded from here

MSc

I did my MSc here at Heriot-Watt. I made a rich web application called The Dendrogrammer as part of my dissertation. Here is a link to a demo of that app.