
This page summarises some of my research themes - see the pages on narrative, agents and affect, and projects for more details.

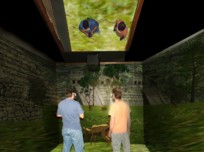

Intelligent Virtual Environments

This field can be defined as the overlap of interactive real-time 3D graphics and artificial intelligence. There are a number of reasons why this overlap is important. One is that while the direct manipulation metaphor of interactive graphics is a very powerful one, in many such environments sensory engagement only takes the user so far. The reaction to some virtual environments could be summed up by the phrase "very pretty, but what is it?". A virtual model of an RAF Tornado can only support a deeper engagement if knowledge about its components, their interrelationships and their behaviour is available to the user. Ontology engineering is a vital issue here, as is the embedding and propagation of knowledge-based constraints and of sequencing technologies such as intelligent planning and scheduling.

Bringing together AI and VR in this way raises some serious architectural issues. There is a tendency to embed added knowledge or other intelligent technologies directly into the visualisation system, sometimes via a scripting system, without thinking about the impact this may have on reusability, especially where the visualisation system is proprietary, as for example in computer game engines. In the ELVIS project we were working to establish the concept of a more decoupled architecture, in which a symbolic world model is the central component, and the graphical components are seen as visualisers for what happens in that model. Apart from supporting reusability, this also makes it possible to use different visualisation technologies - 2D, 3D, augmented reality - with the same knowledge and behaviour, depending on factors such as the requirements of the user, the availability of specialised hardware and the use to which the environment is being put.
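The decoupling described above can be sketched in a few lines of Python. This is an illustration only, not the ELVIS code: the class names (`WorldModel`, `TextVisualiser`, `SceneGraphVisualiser`) and the landing-gear example are assumptions made for the sketch. The point it shows is that the symbolic model holds the state and publishes changes, while any number of visualisers subscribe to it without the model knowing how they render.

```python
from typing import Callable, Dict, List, Tuple

# All names here are hypothetical; this is not the ELVIS implementation.
Event = Tuple[str, str, object]  # (entity, property, new value)


class WorldModel:
    """Symbolic world state. It knows nothing about rendering."""

    def __init__(self) -> None:
        self._facts: Dict[Tuple[str, str], object] = {}
        self._listeners: List[Callable[[Event], None]] = []

    def subscribe(self, listener: Callable[[Event], None]) -> None:
        """Register a visualiser (any callable taking an Event)."""
        self._listeners.append(listener)

    def update(self, entity: str, prop: str, value: object) -> None:
        """Change the symbolic state, then notify every visualiser."""
        self._facts[(entity, prop)] = value
        for listener in self._listeners:
            listener((entity, prop, value))

    def get(self, entity: str, prop: str) -> object:
        return self._facts[(entity, prop)]


class TextVisualiser:
    """Stands in for a simple 2D view: one text line per change."""

    def __init__(self) -> None:
        self.lines: List[str] = []

    def __call__(self, event: Event) -> None:
        entity, prop, value = event
        self.lines.append(f"{entity}.{prop} = {value}")


class SceneGraphVisualiser:
    """Stands in for a 3D view: keeps the latest properties per node."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Dict[str, object]] = {}

    def __call__(self, event: Event) -> None:
        entity, prop, value = event
        self.nodes.setdefault(entity, {})[prop] = value


def demo():
    """Drive two different visualisers from the same world model."""
    world = WorldModel()
    text_view, scene_view = TextVisualiser(), SceneGraphVisualiser()
    world.subscribe(text_view)
    world.subscribe(scene_view)
    world.update("landing_gear", "state", "retracted")
    world.update("landing_gear", "state", "extended")
    return world, text_view, scene_view
```

Swapping or adding a visualiser only means subscribing another callable; the knowledge and behaviour held in the model are untouched.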

Synthetic Characters

Synthetic characters are embodied agents interacting with a 3D virtual world in real-time: talking heads, flocks of autonomous animals, crowds, tutors and guides, and all kinds of virtual actors. They raise different problems from those dealt with in software agents: virtual sensing, convincing physical movement, expressive behaviour (including language, facial expression, posture and gesture), and all the issues associated with believability. It is not always recognised by graphics researchers, who are often interested in photo-realism, that believability is not at all the same thing as naturalism. In fact Mori's concept of the 'uncanny valley' suggests that the high expectations aroused by very naturalistic-looking characters may cause very negative reactions when those expectations are inevitably disappointed by some small inconsistency. We have explored some of these issues in the EU FP5 project VICTEC (Virtual ICT with Empathic Characters) and the EU FP6 project eCIRCUS.

Synthetic characters form a testbed for many of the research issues also investigated in robotics - action selection, cognitive and biologically-inspired architectures, social interaction. They also have a multitude of applications, from education and training to fronting e-commerce web sites. More than this, they are a strategic component in pervasive computing.

As computer power is distributed into intelligent environments, both indoors and outdoors, new interfaces will be required. Adaptation works well for the short-term adjustment of an intelligent environment to its users, but over longer time-scales the power of such environments can only be harnessed by strategic interfaces which can be directed naturally by the humans within them. Examples include the ability to tell a smart house that you are going on holiday for two weeks, or a smart office building that a VIP is coming to open a new facility. Synthetic characters offer just such an interface.

Planning and reacting; feeling and thinking

Any agent that has to live in a world - real or virtual - not wholly under its control has to be able to 'do the right thing', in Pattie Maes's phrase [1]. In the earlier days of AI, this was thought of as an ability to plan sequences of actions that would hang together logically to get from an initial situation to a desired goal. The work of Brooks [2], Maes, Suchman [3], Agre [4] and a whole set of other researchers into situated action in the 1980s pointed out that the assumptions of classical planning - an accurate model of the world, no external change - were seldom met, and that the result was agents that on the whole did not do the right thing.

The response of these researchers was a set of behavioural architectures, in which tight sensor-actuator couplings produced a reactive system with little or no internal state. 'The world is its own best model' was the slogan Brooks advanced, and local decision-making was used to make agents responsive to their world.
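A reactive controller of this kind can be sketched as follows. This is a deliberately simplified illustration, not Brooks's subsumption architecture: it uses a fixed priority ordering to choose between behaviours, and the behaviour names, sensor keys and the 0.5 threshold are all assumptions made for the example. Each behaviour maps the current sensor readings straight to an action, and nothing is remembered between calls.

```python
from typing import Callable, Dict, List, Optional

Sensors = Dict[str, float]
Behaviour = Callable[[Sensors], Optional[str]]


def avoid_obstacle(s: Sensors) -> Optional[str]:
    # Fires only when something is closer than an assumed 0.5 threshold.
    return "turn_left" if s["front_distance"] < 0.5 else None


def seek_light(s: Sensors) -> Optional[str]:
    # Turns towards the brighter side; silent when both sides are equal.
    if s["light_left"] > s["light_right"]:
        return "turn_left"
    if s["light_right"] > s["light_left"]:
        return "turn_right"
    return None


def wander(s: Sensors) -> Optional[str]:
    # Default behaviour: always has something to propose.
    return "forward"


# Highest priority first (hypothetical ordering).
PRIORITISED: List[Behaviour] = [avoid_obstacle, seek_light, wander]


def react(sensors: Sensors, behaviours: List[Behaviour] = PRIORITISED) -> str:
    """Return the action of the first behaviour that fires.

    No internal state is kept: the same readings always give the same action.
    """
    for behaviour in behaviours:
        action = behaviour(sensors)
        if action is not None:
            return action
    return "stop"
```

For instance, `react({"front_distance": 0.2, "light_left": 0.0, "light_right": 1.0})` yields `"turn_left"`, because obstacle avoidance outranks light seeking in this ordering.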

Of course the existence of local minima, the problem of resolving conflicting behaviours, and a lack of flexibility were all objections to wholly behavioural systems. We were among a number of groups who produced heterogeneous architectures [5] [6] [7] - in our case, in the MACTA project, an AI planner was coupled to multiple behaviourally-driven robots such that the planner provides contextual switching of groups of behaviour patterns, allowing the robots to use an appropriate set for their current goals in a behaviour synthesis system.
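The idea of a planner switching groups of behaviour patterns by context can be illustrated with a small Python sketch. It is not the MACTA implementation: the contexts (`navigate`, `dock`), the behaviours, the sensor keys and the thresholds are hypothetical, and the "plan" is simply a list of context names. What it shows is that the planner never issues motor commands; it only selects which behaviour set is active, and the active set then reacts to the sensors.

```python
from typing import Callable, Dict, List, Optional

Sensors = Dict[str, float]
Behaviour = Callable[[Sensors], Optional[str]]

FAR = 9.9  # assumed default reading when a sensor reports nothing nearby


def avoid(s: Sensors) -> Optional[str]:
    return "turn_left" if s.get("front_distance", FAR) < 0.5 else None


def follow_wall(s: Sensors) -> Optional[str]:
    return "hug_wall" if s.get("wall_distance", FAR) < 1.0 else None


def approach_load(s: Sensors) -> Optional[str]:
    return "grip" if s.get("load_distance", FAR) < 0.2 else "forward"


def cruise(s: Sensors) -> Optional[str]:
    return "forward"


# Hypothetical behaviour groups, one per planning context.
BEHAVIOUR_PACKS: Dict[str, List[Behaviour]] = {
    "navigate": [avoid, follow_wall, cruise],
    "dock": [avoid, approach_load],
}


class Robot:
    """Purely reactive robot whose active behaviour set can be swapped."""

    def __init__(self) -> None:
        self.active: List[Behaviour] = []

    def load_pack(self, context: str) -> None:
        self.active = BEHAVIOUR_PACKS[context]

    def step(self, sensors: Sensors) -> str:
        for behaviour in self.active:
            action = behaviour(sensors)
            if action is not None:
                return action
        return "stop"


def run_plan(plan: List[str], readings: List[Sensors]) -> List[str]:
    """For each plan step, switch context, then let the behaviours react."""
    robot = Robot()
    actions = []
    for context, sensors in zip(plan, readings):
        robot.load_pack(context)
        actions.append(robot.step(sensors))
    return actions
```

The same sensor reading can produce different actions depending on the context chosen by the planner: with `{"load_distance": 0.1}` the `navigate` set keeps cruising forward, while the `dock` set grips the load.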

While MACTA produced a solution linking a planner to a behavioural system downwards, it is clear that it is equally necessary to link these systems upwards, so that planning - or replanning - is invoked when the behavioural systems fail or are deadlocked. One role of affective systems in animals seems to be in helping to regulate behaviours, and so it would be interesting to see if emotions such as frustration, fear, and anger could be used to produce this upwards connection. The PSI model of Dörner is one candidate for such a connecting framework.
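One way such an upwards connection might look is sketched below. This is a speculative illustration, not an implemented system and not the PSI model: the `FrustrationMonitor` class, its threshold, gain and decay values are all assumptions chosen for the example. A scalar "frustration" level rises whenever the behavioural layer makes no progress and decays when it does; crossing the threshold is the signal that hands control back to the planner.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class FrustrationMonitor:
    """Hypothetical affect-like signal linking behaviours up to the planner."""

    threshold: float = 1.0  # assumed level at which replanning is requested
    gain: float = 0.4       # assumed increase per step without progress
    decay: float = 0.5      # assumed multiplier applied after progress
    level: float = 0.0

    def update(self, made_progress: bool) -> bool:
        """Update the level; return True when replanning should be invoked."""
        if made_progress:
            self.level *= self.decay
        else:
            self.level += self.gain
        return self.level >= self.threshold


def run(progress_trace: List[bool],
        monitor: Optional[FrustrationMonitor] = None) -> List[str]:
    """Label each step 'act' (behaviours continue) or 'replan' (planner invoked)."""
    monitor = monitor or FrustrationMonitor()
    events = []
    for made_progress in progress_trace:
        if monitor.update(made_progress):
            events.append("replan")
            monitor.level = 0.0  # a fresh plan resets the signal
        else:
            events.append("act")
    return events
```

With these assumed values, three consecutive steps without progress trigger a replan, whereas failures interleaved with successes never do, which is the short-term tolerance one would want from a regulating signal.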

It is also clear that affective systems have a role to play at the upper 'cognitive' planning level, whether in goal management, possibly via motivations, or in acting as heuristics in the search of large plan-spaces. Influenced, like many others, by Damasio's remarks on the links between affect and cognition, I hope to incorporate both low-level and high-level affective systems into a heterogeneous architecture working at both cognitive and behavioural levels. My hypothesis is that this will lay the basis for the sort of functionality that synthetic characters - and robots - require to 'do the right thing' in their worlds.

References

[1] Maes, P. (1990). "How to Do the Right Thing". Connection Science Journal, Special Issue on Hybrid Systems, v1.

[2] Brooks, R. A. (1999). Cambrian Intelligence: The Early History of the New AI. MIT Press.

[3] Suchman, L. A. (1987). Plans and Situated Actions: The Problem of Human-Machine Communication. Cambridge: Cambridge University Press.

[4] Agre, P. E. and Chapman, D. (1987). Pengi: An implementation of a theory of activity. In Proceedings of the Sixth National Conference on Artificial Intelligence (AAAI-87), pages 268-272, Seattle, WA, USA. AAAI.

[5] Barnes, D. P., Ghanea-Hercock, R. A., Aylett, R. S., and Coddington, A. (1997). Many hands make light work? An investigation into behaviourally controlled co-operant autonomous mobile robots. In Proc. 1st International Conference on Autonomous Agents, Marina del Rey, pp. 413-420, February 1997.

[6] Arkin, Ronald C. 1989. Towards the Unification of Navigational Planning and Reactive Control. Working Notes of the AAAI Spring Symposium on Robot Navigation. Stanford University, March 28-30, 1989.

[7] Bonasso, R. Peter and David Kortenkamp, 1996. Using a Layered Control Architecture to Alleviate Planning with Incomplete Information.
